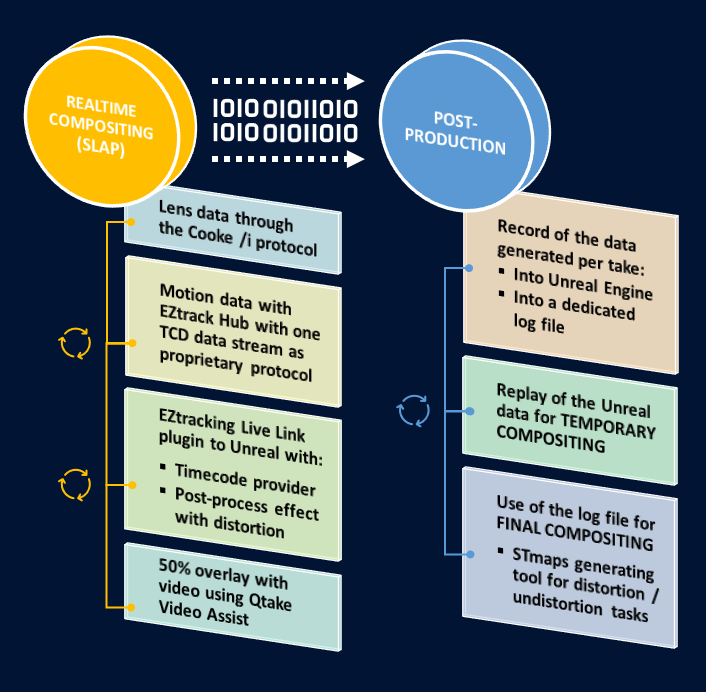

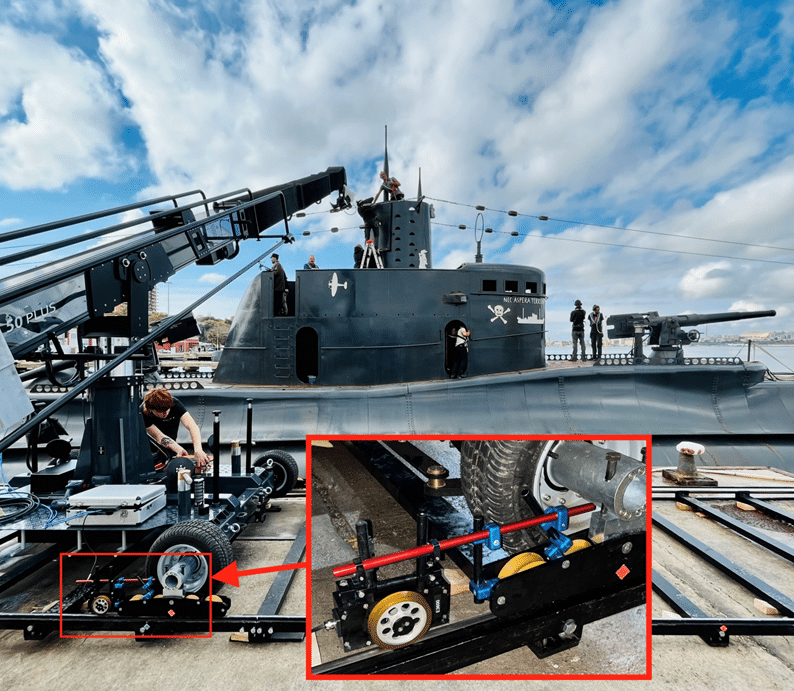

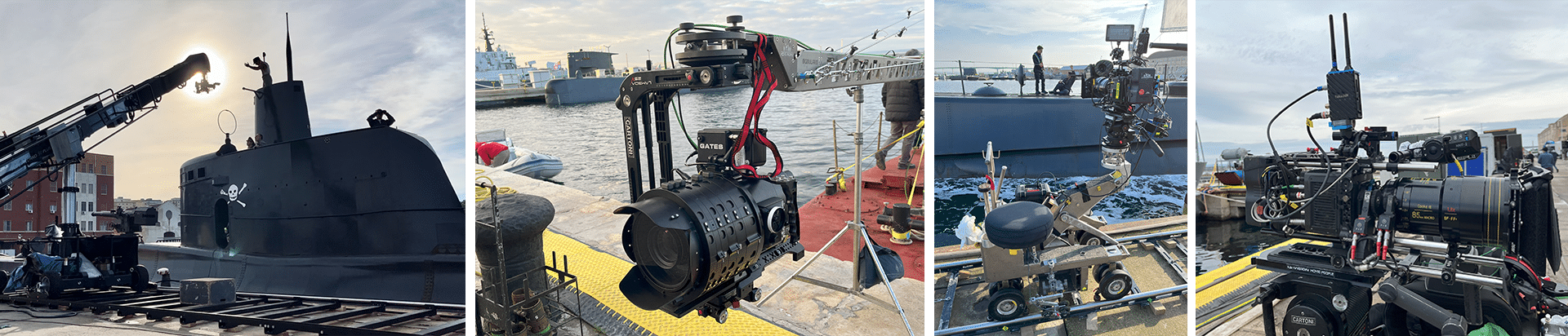

The NRT (Near Real Time) pipeline is a concept being engineered by David Stump and Kevin Haug for the production of the film *Comandante*.

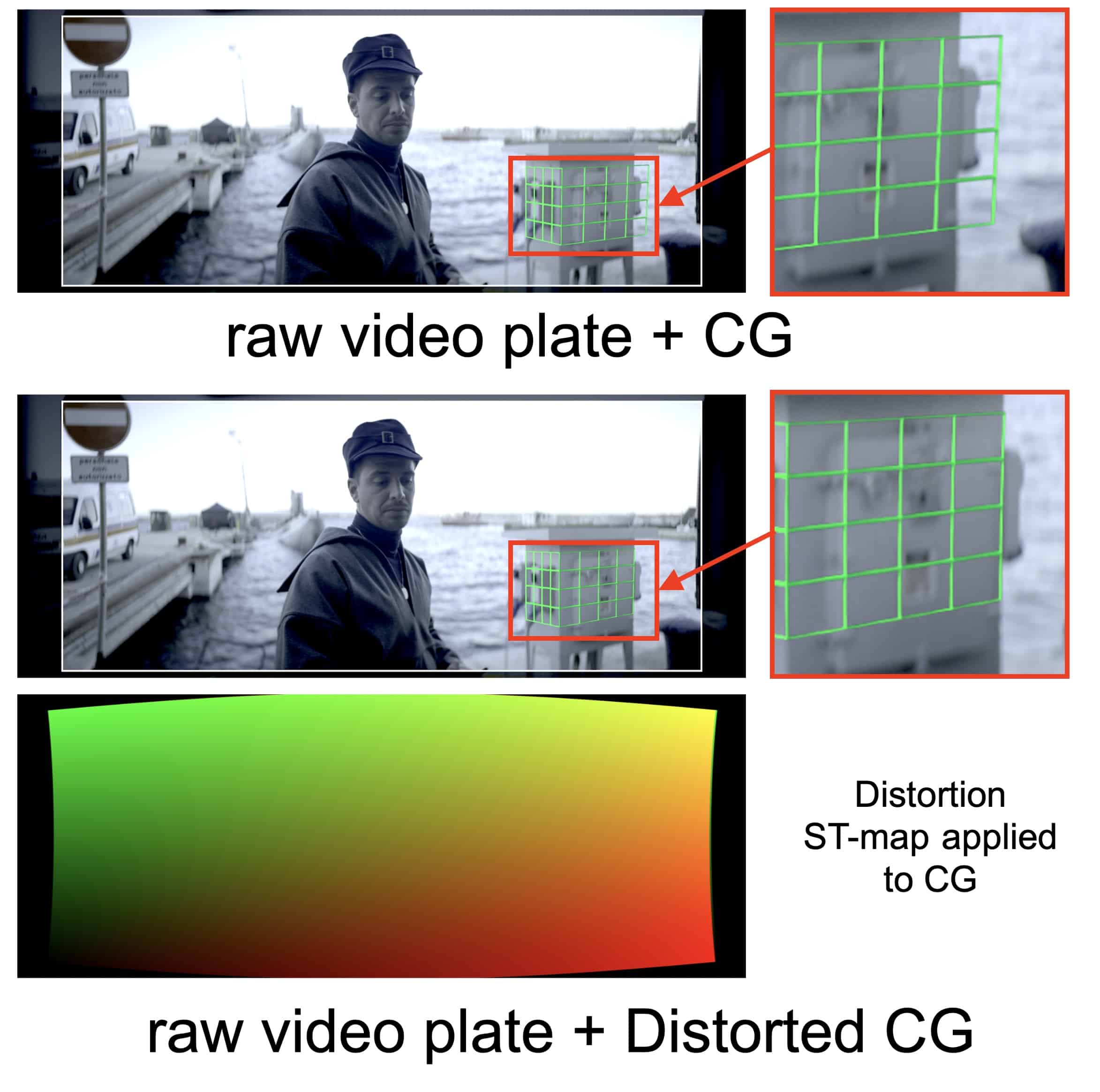

The workflow primarily aims to bring together key advances in lens calibration, machine learning and real-time rendering. The goal is to deliver higher-quality composites of what was just shot to the filmmakers within minutes.

The NRT pipeline blends several technologies together. EZtrack is one of them: our tracking system has been combined with our expertise in lens metadata.