THE BORDEAUX-BASED COMPANY FEATURES ITS LATEST INNOVATIONS FOR CAMERA TRACKING AND LENS CALIBRATION!

Established in the heart of Bordeaux, France, oARo SAS develops camera tracking and lens profiling solutions for real-time and live productions. Specializing in image computing innovations for augmented reality, the company provides both tools and tailored services aimed at demanding studio creatives and DOPs who are looking for flexible tools for their virtual productions. Stéphane Delouche, EZtrack Head of Sales and Virtual Productions Manager, presents his company’s latest innovations.

Author: Alexia de Mari | MEDIAKWEST #47 JUNE – JULY – AUGUST 2022

How did oARo arrive in the tracking and real-time market?

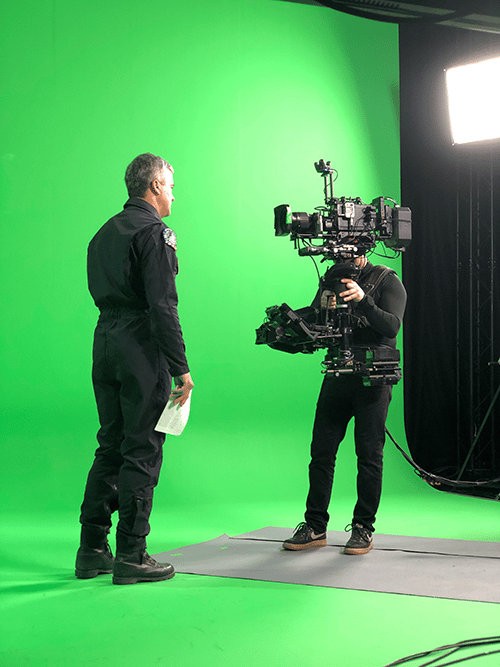

SolidAnim, a small independent French studio founded in 2007 and specializing in 3D animation and motion capture, entered the niche of real-time on-set 3D previsualization in 2014 with its first tracking solution, called SolidTrack. This depth camera scanned a real space and analyzed numerous points using distance algorithms to determine camera movements in real time. At the end of 2021, SolidAnim founded oARo to position its subsidiary as a specialist in camera tracking innovations and services dedicated to on-set virtual production. It was against a backdrop of simplified equipment and a change in target clientele that we developed a new tracking tool: EZtrack. The technology is developed entirely in Bordeaux by our R&D team, with the unique feature that it can be deployed easily and rapidly on any green screen cyclorama or xR studio with latest-generation LED walls, and on any type of camera: shoulder-mounted, Steadicam, crane, PTZ…

How does EZtrack work?

Our EZtrack system is the only optical tracking solution to offer an environment and workflow that are both scalable and open. The heart of the system is a small central unit which is the nerve center of tracking operations. A veritable electronic hub, it can interface with different types of position sensors to track not only studio cameras, but also virtual objects and people, actors, or talent on set, in real time. Our technology is particularly adaptive, with the possibility of interchanging tracking options. The system works on green screen cycloramas as well as LED walls.

Our team has adapted Valve’s Lighthouse technology to ensure accurate and stable camera tracking while keeping the cost of access to the technology low. Proof of the system’s openness is its support for another technology currently very much in vogue with virtual studio teams: Antilatency, which works using active infrared markers that users can position on the floor or on the ceiling, on their grids. These markers emit infrared light, and a tiny sensor (called the “Alt” tracker) picks up the infrared information they emit. As a hub, EZtrack can interface with these sensors and other technologies, notably motion capture cameras such as Vicon and OptiTrack.

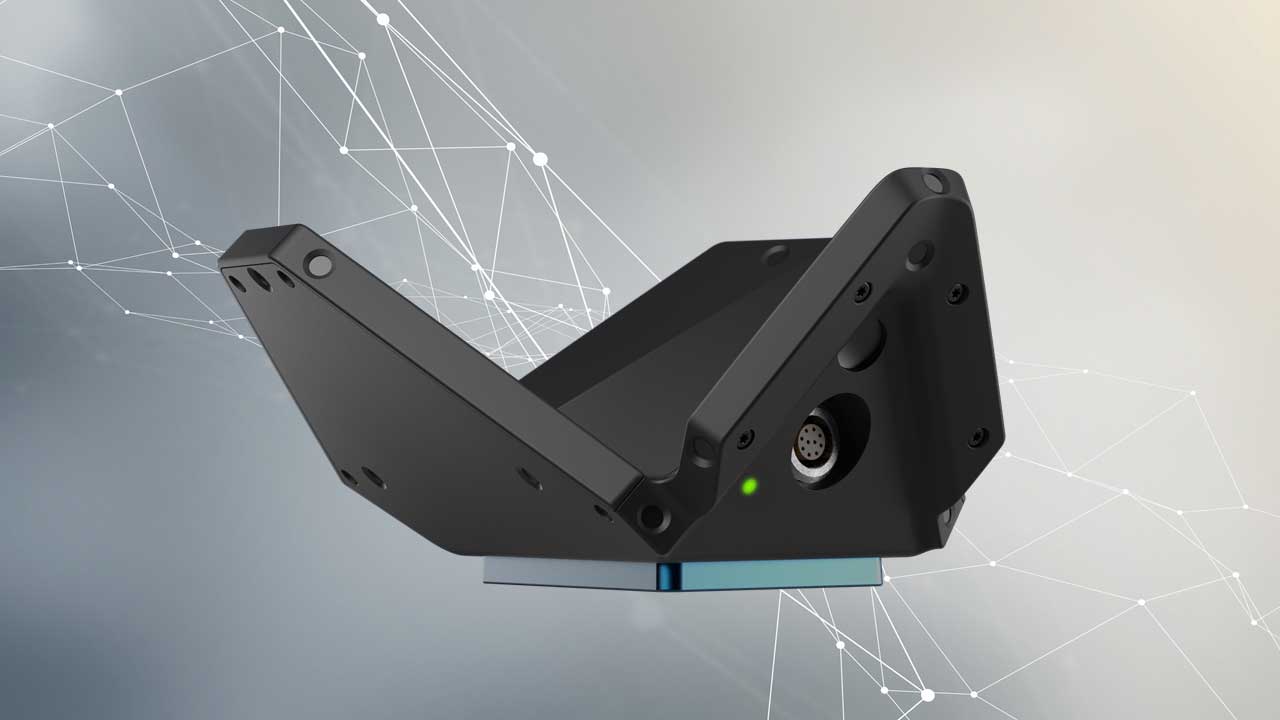

What will the new GEN.2 bring to the EZtrack Hub?

Firstly, the smaller embedded electronics have enabled us to halve the dimensions of the EZtrack Hub, and therefore its overall form factor. This is an improvement that existing EZtrack users had asked for, especially for shoulder-mounted or hand-held cameras.

Cameramen like to have lightweight equipment that won’t get in their way. The idea is to place the EZtrack hub directly on the back of the camera; it’s something that some customers have been asking for, and they see a benefit in it, so we’ve responded to their needs. Next, we’ve added a Timecode output to this new version. In the first version of the hub, tracking data could not be recorded natively, yet reusing camera movement information requires recorded camera location data. Version 2 of the hub, which will be available by the end of June, will be able to record tracking information directly via the EZtrack interface, using the Timecode and Sync/Genlock inputs built into the Hub. These inputs enable the EZtrack system to synchronize itself with the camera, so that images and camera tracking information are recorded on exactly the same timing. This feature is very useful in post-production for artists who need to composite 3D images with real images filmed on set, using a “parallax effect” that is intended to be as realistic as possible: camera tracking information is reused to precisely adjust the position of the 3D layer in relation to the camera’s movement at any given moment, and also in relation to the exact timing of the image displayed on screen. All these parameters need to be perfectly synchronized to produce a final image in which 3D and real sequences blend and overlap without visual artifacts.
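To illustrate the idea of matching recorded tracking data to filmed images by timecode, here is a minimal Python sketch. It is not EZtrack's recording format or API: the function names, the non-drop-frame `HH:MM:SS:FF` timecode layout, and the `(frame_index, pose)` sample structure are all assumptions made for the example.

```python
from bisect import bisect_left

def timecode_to_frames(tc: str, fps: int) -> int:
    """Convert a non-drop-frame 'HH:MM:SS:FF' timecode to an absolute frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff

def nearest_sample(samples, frame):
    """Return the tracking sample closest to a given image frame.

    samples: list of (frame_index, pose) tuples sorted by frame_index
             (hypothetical structure, for illustration only).
    """
    frames = [f for f, _ in samples]
    i = bisect_left(frames, frame)
    if i == 0:
        return samples[0]
    if i == len(samples):
        return samples[-1]
    before, after = samples[i - 1], samples[i]
    # Prefer the earlier sample when both are equally close.
    return before if frame - before[0] <= after[0] - frame else after

# Usage: find the camera pose recorded closest to image timecode 00:00:01:06 at 25 fps.
tracking = [(28, "pose_a"), (30, "pose_b"), (33, "pose_c")]
image_frame = timecode_to_frames("00:00:01:06", 25)
matched = nearest_sample(tracking, image_frame)
```

When the tracker and the camera are genlocked, as described above, each image frame should have a sample on exactly the same frame index, so the nearest-sample lookup only matters as a fallback for dropped samples.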

You’ve created EZtrack Wiki, which provides free access to a database to run your system. Does it meet a need for training?

One of our objectives is to demonstrate the system’s user-friendliness. It’s a way of setting ourselves apart from the competition, who come out with beautiful products that can appear complicated to operate, especially when it comes to getting to grips with these new tools. With us, the learning curve is relatively short. There’s a knowledge base open to all, with video tutorials and a detailed description showing how the system works. You can get to grips with it quickly and easily. We’re a small team, and we get a lot of requests from all over the world. So, for reasons of availability and productivity, we’re obliged to put the knowledge base directly into the hands of users, without being too dependent on customer requests for assistance. The Wiki simply aims to make our registered users independent more quickly.

You now offer your own tracker, called Swan… how did the R&D work go?

We called on two types of skills: electronics engineers for the tracker’s integrated electronics, and product designers to work on the shape of our newly developed SWAN camera tracker. Our R&D team validated the usability aspects against our expertise and background in camera tracking based on optical technologies.

We don’t want to go beyond the Vive tracker, but the idea was to be able to offer a tracker with more advanced capabilities than the Vive. We gain on certain aspects linked to precision and stability, which are improved by a factor of three compared to version 3.0 of the Vive tracker. Because of the infrared signal technology, the Vive tracker generates residual noise, so we particularly wanted to attenuate this side effect linked to its on-board electronics. We wanted this noise to be significantly reduced, even if it can’t be entirely eliminated. To achieve this, we developed and integrated powerful algorithms into EZtrack for smoothing and filtering any residual optical tracker noise: this is what we present as our “Clamp” filter.
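The article does not describe how the “Clamp” filter works internally. Purely to illustrate what smoothing and filtering residual tracker noise can mean in practice, here is a generic Python sketch that combines a small dead band with exponential smoothing. The function name, the `alpha` and `deadband` parameters, and their default values are assumptions chosen for the example, not EZtrack settings.

```python
def smooth_positions(samples, alpha=0.2, deadband=0.0005):
    """Smooth a 1D stream of position samples (in meters, for example).

    - Changes smaller than `deadband` are treated as noise and ignored,
      so a static camera stays perfectly still.
    - Larger changes are followed with exponential smoothing, where
      `alpha` (0..1) controls how quickly the output follows the input.
    Generic illustration only; not the EZtrack "Clamp" filter.
    """
    if not samples:
        return []
    filtered = [samples[0]]
    for value in samples[1:]:
        previous = filtered[-1]
        delta = value - previous
        if abs(delta) < deadband:
            filtered.append(previous)  # hold position: jitter below threshold
        else:
            filtered.append(previous + alpha * delta)  # follow real movement
    return filtered

# Usage: sub-millimeter jitter around 1.0 m is suppressed entirely.
static_camera = smooth_positions([1.0, 1.0001, 1.0002])
```

The trade-off in any such filter is between stability and latency: a stronger filter removes more jitter but makes the virtual camera lag slightly behind fast real movements.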

You have developed EZprofile, a lens calibration software package. Once again, it’s clear that your aim is to offer something very didactic.

Yes, absolutely! It meets an important need on the part of virtual studio operators and cinematographers. We have acquired considerable expertise in all aspects of lens calibration. The principle: when tracking, we need to translate the physical characteristics of a lens into a digital version that can be read by the real-time 3D engine. How do you bridge the gap between the two? The solution is to perform lens profiling, which converts the lens’ mechanical and physical characteristics into a digitized version that the engine can read. Without it, we generate artifacts and imperfections in the curves of the image, and when the camera moves, the 3D may tend to shift in the shot. When taking an image in augmented reality, the lens must be calibrated in relation to the engine to avoid such artifacts. We started from the observation that there was very little on offer, but with the development of virtual studios, this need will logically grow. On the market, we’re competing with three main companies. Two of them offer very high-end, closed systems, and the third has an open but less powerful solution. We wanted something that was easy to operate and scalable. Today, EZprofile supports four real-time 3D rendering engines. Each lens profile can be finely edited with our software. The solution is available at a relatively affordable price. The license fee per PC station is 1,400 euros, whereas the existing competition can charge 3 to 4 times this price without natively supporting the export of generated lens profiles to multiple 3D engines. EZprofile is completely independent of EZtrack, so it can be used with any tracking solution compatible with the FreeD protocol to benefit from the automatic calibration mode. For a reasonable license cost, studios can calibrate any type of lens: cine prime lenses, hybrid zoom lenses, and ENG, EFP, and broadcast zoom optics with embedded servo drives and lens encoders.
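As a rough illustration of what a digitized lens profile can contain, here is a minimal Python sketch of a common radial distortion model, in which two coefficients describe how far image points are pushed away from or pulled toward the optical center. This is a generic textbook model, not EZprofile's file format or algorithm: the function name, the coefficients `k1` and `k2`, and the example values are assumptions.

```python
def distort(x, y, k1, k2):
    """Apply a two-coefficient radial distortion to a normalized image point.

    (x, y) are coordinates relative to the optical center, in normalized
    units. k1 and k2 are hypothetical profile coefficients: with this
    mapping, positive values push points outward and negative values
    pull them toward the center.
    """
    r2 = x * x + y * y                      # squared distance from the center
    scale = 1.0 + k1 * r2 + k2 * r2 * r2    # radial scaling factor
    return x * scale, y * scale

# Usage: the center of the image is unaffected, the edges move the most.
center = distort(0.0, 0.0, k1=0.1, k2=0.0)
edge = distort(1.0, 0.0, k1=0.1, k2=0.0)
```

A render engine that applies the same model with the same coefficients to its virtual camera bends the 3D layer the way the physical lens bends the filmed image, which is what keeps the two aligned. For zoom lenses, the coefficients typically vary with the zoom and focus positions reported by the lens encoders.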

What are your future goals?

This is an interesting year for our team! With these product launches, the idea is to validate our very open approach to tracking, and to meet the needs of as many studios as possible with an offer that is affordable in terms of acquisition costs. Our aim is to provide a highly tailored support service to our registered EZtrack customers: the service dimension is key for us, and we keep developing and improving it over time. Our distribution network is also growing across the globe every quarter. In the second quarter of 2022, we are developing the Indian and Japanese markets. There’s a strong concentration of creative teams in India. The same goes for Japan, where we’re seeing real, tangible developments and several studios being set up for virtual production.